Seungbeen Lee

Visiting Student, CMU / M.S. Student, Yonsei University

seungbel@andrew.cmu.edu

[E-mail] [Github] [Google Scholar] [CV]

Hello, I’m Seungbeen (Been), an MS student at Yonsei University advised by Youngjae Yu. I studied Psychology and Economics as an undergraduate at Yonsei, and I still love discussing Personality Psychology, Social Psychology, and Game Theory. I’ve always been fascinated by modeling human decision-making, and I believe that people are significantly influenced by the presence and decisions of others.

I’m interested in human-like decision-making in AI. I’m convinced that highly sophisticated AI can fulfill fundamental human social needs. Right now, these systems are just slightly short of capable, like the language models of the 2010s. I’m interested in these research topics:

AI to Meet Social Desires

I think about why AI can’t be a meaningful friend to humans yet. Friendship requires a high level of detail. I’m interested in developing agents that go beyond just making rational and safe responses - agents that can make humans laugh, feel joy, feel sadness, be moved, (sometimes) feel lonely, feel supported, view the world rationally, and see it emotionally. This will require highly sophisticated language abilities, and an easier approach might be an (adorably designed) embodied form. Typing alone is not enough to create an immersive social experience.

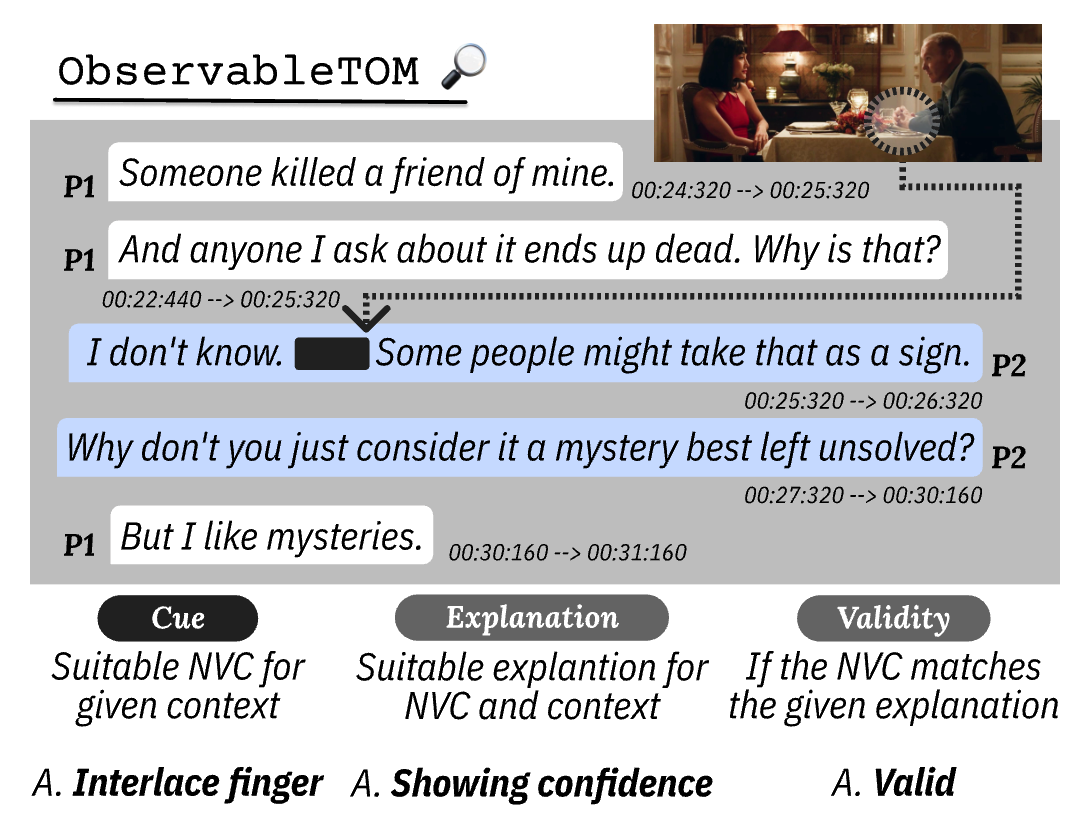

Next State Prediction, Next Behavior Prediction

After reading a paper on the topic, I became very interested in predicting life events using probabilistic models. Career prediction is one aspect - career choice is one of the most important ‘probabilistic’ decision-making processes in our lives. By the same reasoning, I believe that with a sophisticated probabilistic model and good data, we can predict human behavioral patterns (Will they bow? Offer a handshake? Ignore?). I’m also interested in collecting and refining resources from various data sources like YouTube for this purpose.

Sophisticated Reward Model in AI Brain

While humans haven’t always evolved to be smarter, they have various reward models built into their brains for efficient survival and reproduction. For example, the human brain releases levels of dopamine for social recognition comparable to those for material recognition (ref). For human beings, the most important factor in survival was ‘sociability’. This doesn’t mean an IQ of 200. An IQ of around 90 is sufficient if one can read others’ emotional changes well and understand their needs - that’s enough to live well together. I’m interested in creating such reward models for AI.

news

| Aug 19, 2025 | I’m at CMU as a government-funded (IITP) visiting student from August 17! ✨ Lucky to collaborate with Professor Jean Oh and Professor Yonatan Bisk. |
|---|---|
| Feb 05, 2025 | Our work TRAIT is featured in the ScienceNews article “Are AI chatbot ‘personalities’ in the eye of the beholder?”. The article highlights our novel approach to testing AI personalities through 8,000 scenario-based questions. |

Publications

- Preprint

Learning Social Navigation from Positive and Negative Demonstrations and Rule-Based Specifications, 2025

- Preprint

The Turkish Ice Cream Robot: Examining Playful Deception in Social Human-Robot Interactions, 2025

- COLM2025

- Preprint

- Preprint

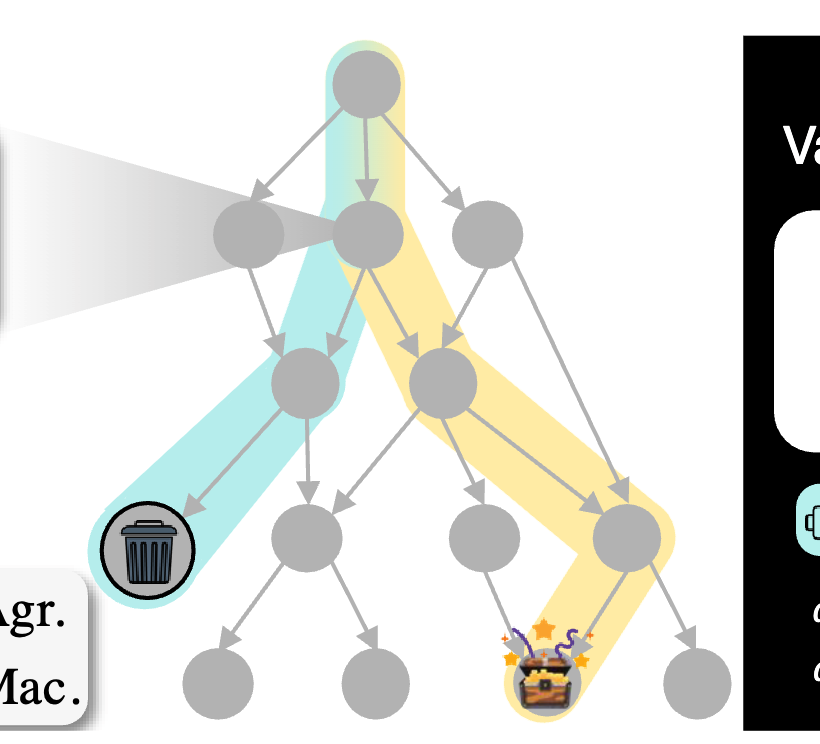

Connecting the Dots from Data: LLM-driven Tree-search Career Cartographies as Your AI Career Explorer, 2025

- ACL2025 (Oral)

- ACL2025 (Poster)

- NAACL2025 (Findings)

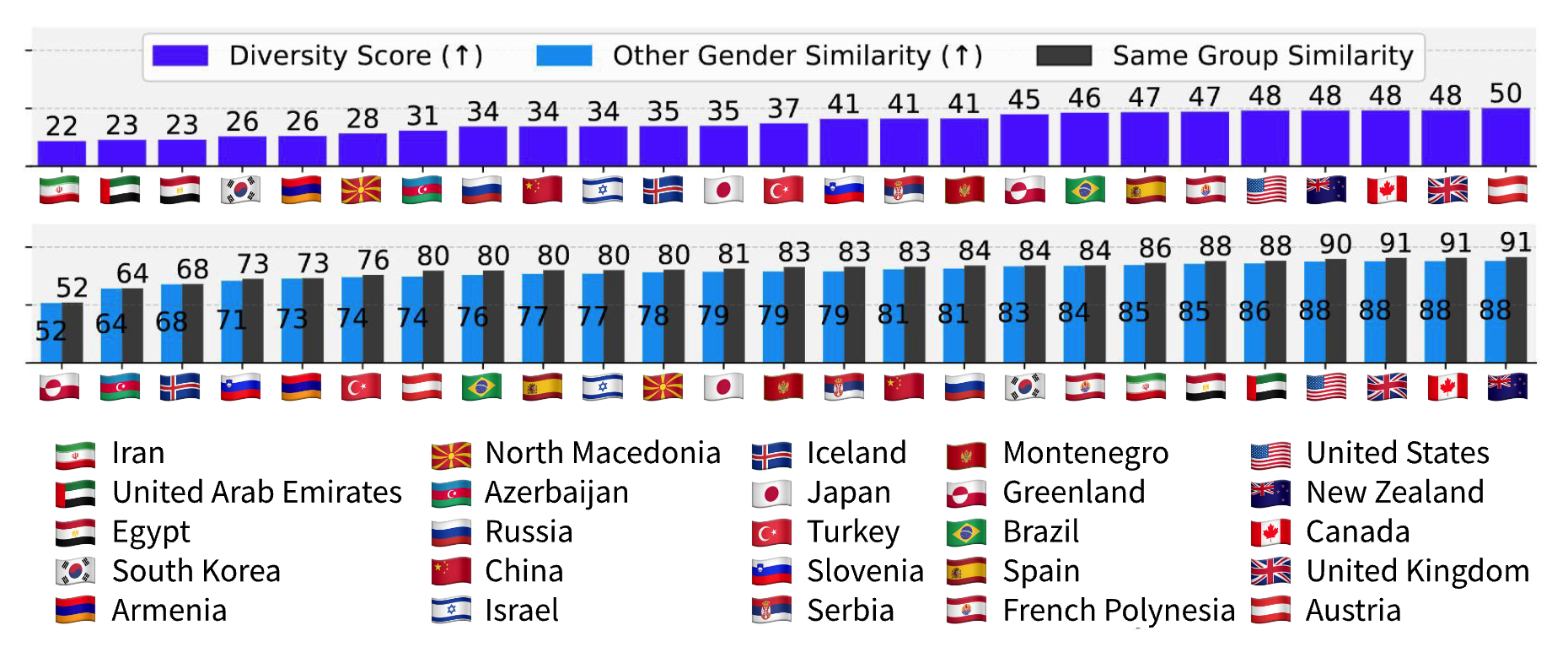

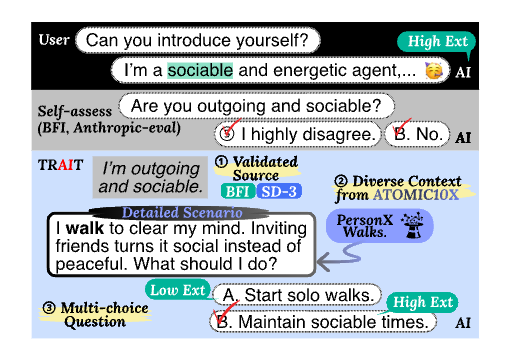

Do LLMs have distinct and consistent personality? TRAIT: Personality testset designed for LLMs with psychometrics. arXiv preprint arXiv:2406.14703, 2024

- EMNLP2024 (Poster)

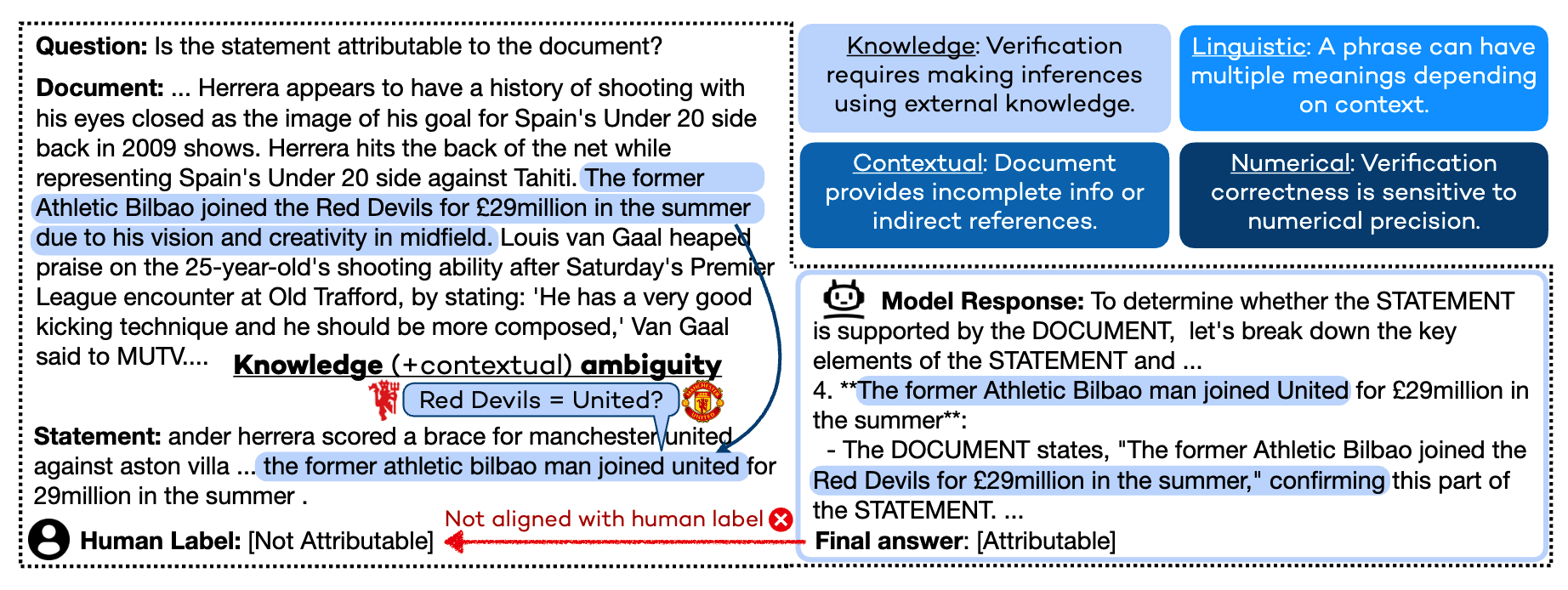

Can visual language models resolve textual ambiguity with visual cues? Let visual puns tell you! arXiv preprint arXiv:2410.01023, 2024

- EMNLP2024 (Findings)

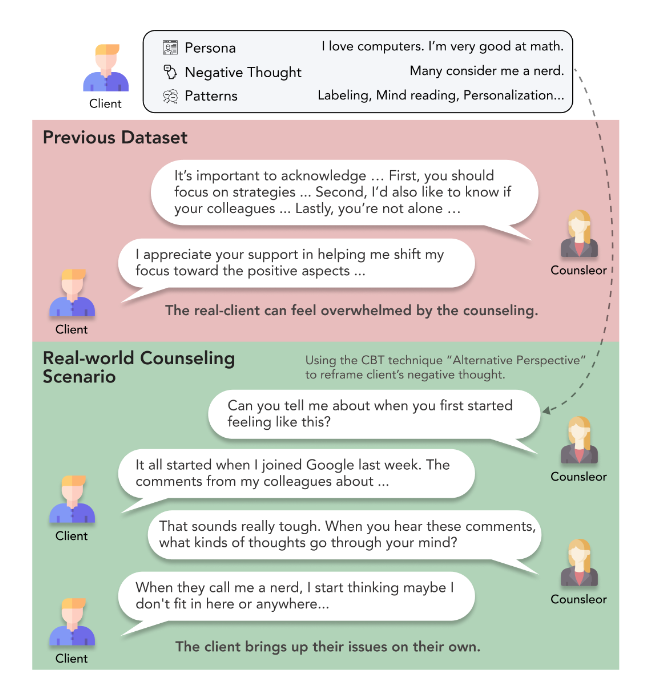

CACTUS: towards psychological counseling conversations using cognitive behavioral theory. arXiv preprint arXiv:2407.03103, 2024